Free LLM Playground

Try top LLM models from the best AI providers (ChatGPT, Gemini, Claude, and more) in a single LLM playground for free. Share results in one click. No signup, no API keys.

Why Free LLM Playground?

Start instantly.

No signup. No API keys.

Just type and run.

Compare models.

Across OpenAI, Anthropic, Gemini & open-weights.

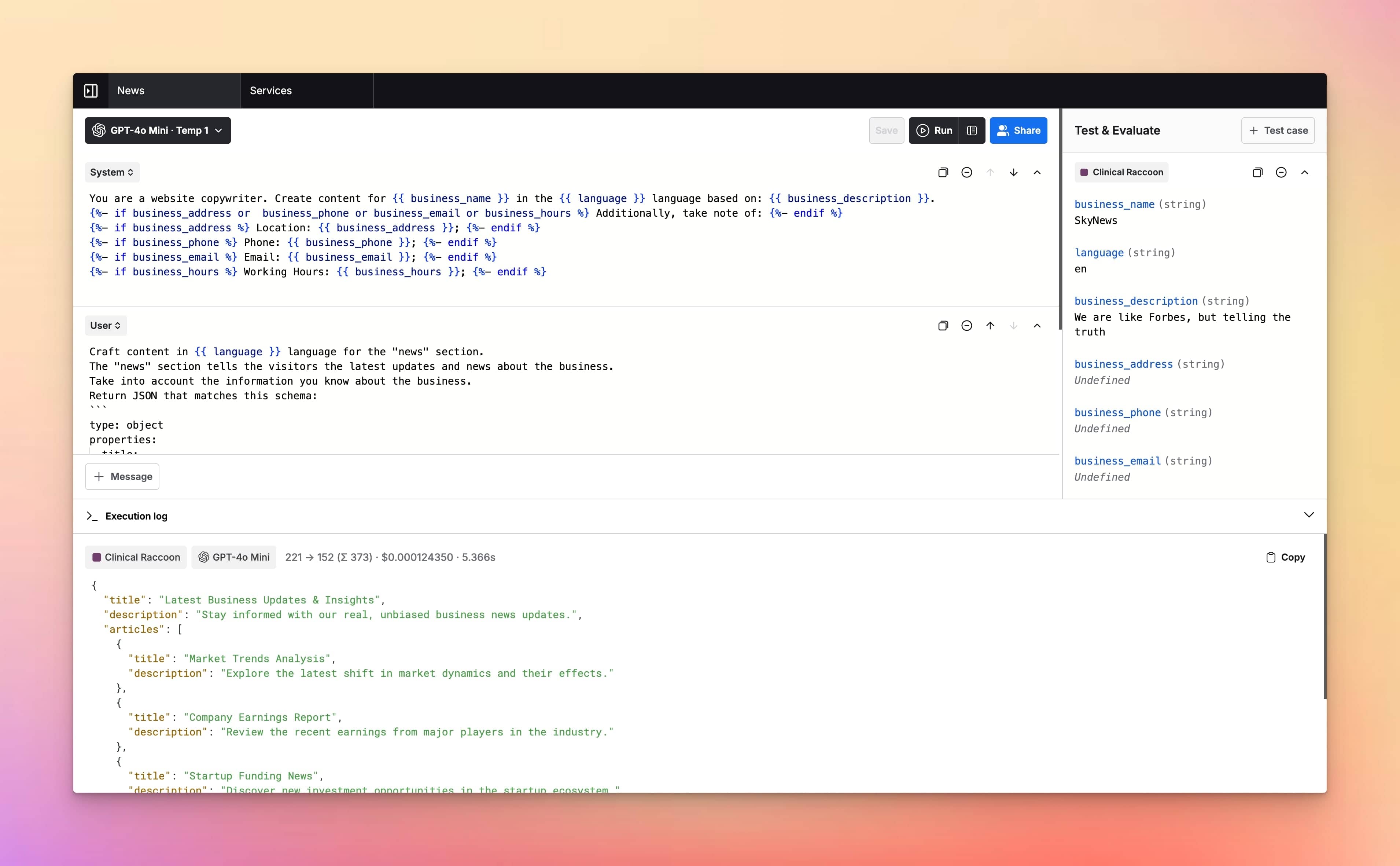

Run with variables.

Jinja2 templates and inputs for real-data testing.

Share & replay.

Public links, transcripts, and cURL/JS export.

Private by default.

We don't train on your prompts and data.

Scales with you.

Buy more volume when you need it.

Why Us over other LLM Playgrounds?

Other playgrounds (from VC-backed companies)

LLM Playground (powered by LangFast)

Explore All Features

Supported AI Models

- GPT-5

- GPT-5 Mini

- GPT-5 Nano

- GPT-4.1

- GPT-4.1 Mini

- GPT-4.1 Nano

- GPT-4o

- GPT-4o Mini

- O1

- O1 Mini

- O3

- O3 Mini

- O4 Mini

- GPT-4 Turbo

- GPT-3.5 Turbo

- Claude AI Models (soon)

- Gemini AI Models (soon)

- Model Fine-tuning (soon)

Model configuration

- Custom System Instructions

- Reasoning Effort Control

- Stream Response Control

- Temperature Control

- Presence & Frequency Penalty

User Interface

- Customizable Workspace

- Wide Screen Support

- Hotkey & Shortcuts

- Voice Input (soon)

- Text-to-Speech (soon)

Playground Experience

- Prompt Library

- Prompt Templates & Variables

- Jinja2 Templates Support

- Upload Documents (soon)

- Language Output Control

- Parallel Chat Support

Prompt Management

- Prompt Folders

- Edit & Fork Prompts

- Prompt Versioning

- Upload Documents (soon)

- Share Prompts

Cost & Performance

- Cost estimation

- Token usage tracking

- Context length indicator

- Max token settings

Security and Privacy

- Private by Default

- API Tokens Cost Estimation

- No chats used for training

- Web Search & Live Data (soon)

Integrations

Plugins

- Custom Plugins (soon)

- Image search plugin (soon)

- Dall-E 3 (soon)

- Web page reader (soon)

Frequently Asked Questions

What is a free LLM playground?

A free LLM playground is a browser UI to test large language models instantly—no setup, no API keys—built for product managers, engineers, data scientists, and writers who want fast prompt experiments without touching SDKs or billing.

How does a free LLM playground work?

Type a prompt and stream a response, then switch or compare models side-by-side; you can save/share a link, export to cURL/JS/JSON, and use templates or variables, with clear free caps (e.g., 50 chats/day) and optional Pro or Team plans to lift limits.
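As a rough illustration of how templates and variables fit together, here is a minimal sketch that renders a Jinja2 prompt template in Python. It assumes the `jinja2` package is installed; the template text, the variable names, and the `render_prompt` helper are hypothetical and not taken from the playground itself.

```python
from jinja2 import Environment, StrictUndefined

# Hypothetical prompt template; the variable names are illustrative only.
PROMPT_TEMPLATE = (
    "Summarize the following {{ doc_type }} in {{ max_sentences }} sentences "
    "for a {{ audience }} audience:\n\n{{ text }}"
)


def render_prompt(template_source: str, variables: dict) -> str:
    """Render a Jinja2 template, raising an error if a variable is missing."""
    # StrictUndefined turns a missing variable into an error instead of
    # silently rendering it as an empty string.
    env = Environment(undefined=StrictUndefined)
    return env.from_string(template_source).render(**variables)


prompt = render_prompt(
    PROMPT_TEMPLATE,
    {
        "doc_type": "support ticket",
        "max_sentences": 2,
        "audience": "non-technical",
        "text": "The export button does nothing when I click it.",
    },
)
print(prompt)
```

Swapping the values in the dictionary lets you test the same prompt against different real-world inputs without editing the prompt text.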

Which large language models can I try in the free playground?

You get access to all major models from the top LLM providers: OpenAI/GPT (e.g., GPT-4o family or lighter variants), Anthropic/Claude (e.g., Haiku/Sonnet tiers), Google/Gemini (e.g., Flash/Pro variants), Meta Llama (e.g., Llama 3.x, 8B/70B), Mistral/Mixtral, and others.

Do I need an API key?

No. You can start immediately. Keys are optional for power users.

Is it actually free? What's the catch?

Yes. You get 50 chats/day with fair-use throttling. No signup, no card required. We hope that eventually you will get enough value to transition to a Pro or Team workspace.

Which models are free?

See "Supported models." Some premium models may require an upgrade to a Pro workspace.

How fast is it?

We stream tokens through a tiny proxy layer so you can use the playground without your own API keys. Typical time to first token is a fraction of a second. Speed varies by model and load.

What's the context window?

Depends on the model (e.g., 8K–200K tokens). We show it next to each model.

Can I upload files or images?

Yes, as long as they are supported by the model itself.

Can I compare models side-by-side?

Yes. You can open as many chat tabs as you want to see multiple models answer the same prompt.

Can I save or share chats?

Yes. Use the "Share" button to manage sharing permissions. You can create public URLs or share access with specific email addresses.

Do you train on my data?

No. We don't use your prompts for training. You control retention in Settings.

Where is my data processed?

We route to model providers; see the Data & Privacy page for regions and details.

Can I use outputs commercially?

Generally yes, subject to each model's terms. We link those on the model picker.

What happens after I hit the free limit?

Wait for the daily reset or upgrade to a Pro workspace to add more volume or your own keys.

Can I bring my own API keys?

Yes, you can if you're on a Team plan. Just reach out to us, and we'll make it happen.

Is there a team plan?

Yes: shared workspace, unlimited requests, bring your own keys, SSO, and centralised billing.

Do you have an API?

Yes. Reach out to us to get more information.

Can I integrate the free LLM playground with my app or workflow?

Yes—share read-only links, export cURL/JS/JSON to replicate calls, and use Bring Your Own Keys (BYOK) to keep the UI while routing costs to your provider.

What's the difference between a free LLM playground and paid LLM APIs?

A free LLM playground is point-and-click for quick evaluation with limited quotas, while paid LLM APIs provide programmatic control, higher throughput, predictable limits, and SLAs for production—use the playground to find prompts/models, then ship with APIs.
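To make the "then ship with APIs" step concrete, here is a minimal sketch of the request body you might assemble once you have settled on a prompt and settings in the playground. It assumes an OpenAI-style Chat Completions payload; the `build_chat_request` helper and the example prompts are hypothetical, and the playground's actual export format may differ.

```python
import json


def build_chat_request(
    model: str,
    system_prompt: str,
    user_prompt: str,
    *,
    temperature: float = 0.7,
    presence_penalty: float = 0.0,
    frequency_penalty: float = 0.0,
    max_tokens: int = 256,
    stream: bool = True,
) -> dict:
    """Build an OpenAI-style chat request body (hypothetical helper)."""
    # Ranges follow the OpenAI Chat Completions API: temperature in [0, 2],
    # presence and frequency penalties in [-2, 2].
    if not 0.0 <= temperature <= 2.0:
        raise ValueError("temperature must be between 0 and 2")
    for name, value in (
        ("presence_penalty", presence_penalty),
        ("frequency_penalty", frequency_penalty),
    ):
        if not -2.0 <= value <= 2.0:
            raise ValueError(f"{name} must be between -2 and 2")
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_prompt},
        ],
        "temperature": temperature,
        "presence_penalty": presence_penalty,
        "frequency_penalty": frequency_penalty,
        "max_tokens": max_tokens,
        "stream": stream,
    }


payload = build_chat_request(
    "gpt-4o-mini",
    "You are a concise assistant.",
    "Explain what a context window is.",
    temperature=0.2,
)
print(json.dumps(payload, indent=2))
```

The same settings you tune in the playground (system instructions, temperature, penalties, max tokens, streaming) map onto fields in this body, so a configuration that works in the UI can be carried over to your own code with your provider's API key.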

How does this compare to OpenAI Playground or Hugging Face Spaces?

As an OpenAI Playground alternative and Hugging Face Spaces alternative, this free LLM playground focuses on instant multi-provider testing (no keys to start), consistent UI, side-by-side comparisons, share links, exports, and transparent free limits in one place.